Production process bottlenecks are costly and impede throughput, which is why manufacturers consistently aim to identify and eliminate them. Insights from line managers and work centers can inform these efforts, but to spot and continually monitor bottlenecks effectively, manufacturers need a reliable system for channeling production data into visualizations. This article covers how leading manufacturers use a modern data platform and business intelligence (BI) tools to spot and monitor bottlenecks in the production process.

How to Build a System to Monitor Production Bottlenecks

Start with a simple goal.

It’s imperative to start with a simple goal. For example, the initial goal might be: “Develop an automated dashboard that allows managers to see actual and theoretical throughput capacity.” This goal isn’t overly broad, and notice that it doesn’t target the outcomes of “improving throughput” or “eliminating bottlenecks.” While those are the ultimate goals, you first need a reasonable goal that lets you start using data for an automated view into production capacity.

Find and collect the pertinent data.

With an initial goal in mind, you can consider the available data pertinent to your efforts. You can expect this to vary considerably, based on how the organization is currently capturing production-related data. For example, some manufacturers will have shop floor data collection systems to consistently capture data based on the production activities on the floor. Some manufacturers will have data captured through their ERP, whether it’s manually input or automatically ingested through an integration with a shop floor data collection system.

Think about the systems you have in place and ensure you have a basic understanding of where the key data resides. Once you have that list, talk with line managers and other knowledge workers to understand any limitations of the data. For example:

- How complete is the data?

- What is the longitudinal coverage, i.e., how far back does the history go?

- Are we failing to capture any data points that are critical to understanding capacity and throughput?
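Before those conversations, a quick profile of an extract can make the first two questions concrete. The sketch below is a minimal illustration, assuming a hypothetical list of production records with made-up field names (`machine`, `date`, `units_good`, `runtime_hours`) where a missing value is stored as `None`. It reports the share of populated values per field and the date span covered per machine.

```python
from datetime import date

# Hypothetical extract of production records from a shop floor system or ERP.
# None marks a field that was never captured for that record.
records = [
    {"machine": "M1", "date": date(2024, 1, 2), "units_good": 410, "runtime_hours": 7.5},
    {"machine": "M1", "date": date(2024, 1, 3), "units_good": None, "runtime_hours": 7.0},
    {"machine": "M2", "date": date(2024, 1, 2), "units_good": 380, "runtime_hours": None},
    {"machine": "M2", "date": date(2024, 3, 28), "units_good": 395, "runtime_hours": 7.8},
]

def completeness(rows, field):
    """Share of rows where `field` is populated (0.0 to 1.0)."""
    if not rows:
        return 0.0
    filled = sum(1 for r in rows if r.get(field) is not None)
    return filled / len(rows)

def coverage(rows):
    """Earliest and latest record date per machine (longitudinal coverage)."""
    spans = {}
    for r in rows:
        lo, hi = spans.get(r["machine"], (r["date"], r["date"]))
        spans[r["machine"]] = (min(lo, r["date"]), max(hi, r["date"]))
    return spans

for field in ("units_good", "runtime_hours"):
    print(field, f"{completeness(records, field):.0%}")
print(coverage(records))
```

The numbers only flag where to dig. Line managers can explain why a field is missing, which a script cannot.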

Bring all of the necessary data into a data lakehouse.

With an understanding of where the key data resides, you’re ready to consider how to deliver insights. At this stage, it’s critical to design a dashboard solution that is as automated as possible. By automating the process of channeling raw data into curated insights, organizations preserve time for analysis, decision-making, and action. These are the fundamental ways that data initiatives create value, yet time is commonly wasted on manual processes to refresh reports with the most recent data.

There are three pillars to a performant, automated business intelligence system:

- A modern data platform to house raw data

- Data modeling to build in metric definitions

- A dashboard platform, such as Power BI, to serve insights

The data platform is of particular importance. This could be a data warehouse or a more modern data lakehouse. Think of it as a landing spot for raw data, with data pipelines connected to each source system (shop floor collection system, ERP, CRM, etc.). These pipelines allow the most recent data to automatically flow into the data lakehouse on a regular schedule.
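One common way to make those pipelines refresh automatically is an incremental load: each scheduled run copies only the rows that arrived since the last run. The sketch below is illustrative only. It simulates a source system and the landing zone with in-memory SQLite databases, uses hypothetical table names (`production_events`, `raw_production_events`), and assumes source row ids only ever increase, so the highest landed id can serve as the watermark. Triggering the run on a schedule (cron, an orchestrator, or the platform’s own scheduler) is outside the sketch.

```python
import sqlite3

# Hypothetical source system and landing zone, simulated with in-memory SQLite.
source = sqlite3.connect(":memory:")
lake = sqlite3.connect(":memory:")

schema = "(id INTEGER PRIMARY KEY, machine TEXT, units INTEGER, recorded_at TEXT)"
source.execute(f"CREATE TABLE production_events {schema}")
lake.execute(f"CREATE TABLE raw_production_events {schema}")
source.executemany(
    "INSERT INTO production_events VALUES (?, ?, ?, ?)",
    [(1, "M1", 50, "2024-01-02T08:00"), (2, "M2", 45, "2024-01-02T08:00")],
)

def load_increment():
    """Copy only rows newer than the highest id already landed (the watermark)."""
    watermark = lake.execute(
        "SELECT COALESCE(MAX(id), 0) FROM raw_production_events"
    ).fetchone()[0]
    new_rows = source.execute(
        "SELECT id, machine, units, recorded_at FROM production_events "
        "WHERE id > ? ORDER BY id",
        (watermark,),
    ).fetchall()
    lake.executemany("INSERT INTO raw_production_events VALUES (?, ?, ?, ?)", new_rows)
    lake.commit()
    return len(new_rows)

print(load_increment())  # first run lands both existing rows
source.execute("INSERT INTO production_events VALUES (3, 'M1', 52, '2024-01-02T09:00')")
print(load_increment())  # next run lands only the newly recorded row
```

If a source system updates rows in place, an id watermark would miss those changes. A last-modified timestamp or change data capture would be needed instead.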

Crucially, the data platform also allows businesses to combine data from disconnected systems. Consider a scenario where you need to pull data from system A and system B to get an accurate view of throughput; the data platform makes that combination possible. Through data modeling, you can then codify specific metric definitions that convert the most recent raw data into insights about performance. With all three pillars in place, companies can implement a process in which little or no time is spent preparing and serving insights. Instead, the BI system presents insights each day, allowing the business to focus on what the data is saying and how to make adjustments to improve key metrics.
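To illustrate the system A / system B scenario, here is a minimal sketch. It assumes a hypothetical split in which the shop floor system records completed units and the ERP records rejected units, both keyed by machine and shift. Joining on that key yields the acceptable-unit count that a throughput metric needs. The field names and the rule that a missing ERP record means zero rejects are assumptions for illustration.

```python
# Hypothetical extracts already landed in the data platform.
# System A: shop floor counts of completed units per machine and shift.
shop_floor = [
    {"machine": "M1", "shift": "2024-01-02 A", "units_completed": 420},
    {"machine": "M2", "shift": "2024-01-02 A", "units_completed": 390},
]
# System B: ERP quality records of rejected units for the same keys.
erp_rejects = [
    {"machine": "M1", "shift": "2024-01-02 A", "units_rejected": 12},
]

def combine(completed, rejects):
    """Join the two systems on (machine, shift) and derive good units."""
    reject_lookup = {(r["machine"], r["shift"]): r["units_rejected"] for r in rejects}
    combined = []
    for row in completed:
        key = (row["machine"], row["shift"])
        rejected = reject_lookup.get(key, 0)  # assumption: no ERP record means no rejects
        combined.append({**row, "units_rejected": rejected,
                         "units_good": row["units_completed"] - rejected})
    return combined

for row in combine(shop_floor, erp_rejects):
    print(row["machine"], row["units_good"])
```

In practice this join would usually live in the data model (for example, as SQL in the warehouse or lakehouse) rather than in a script, so every report uses the same logic.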

Determine and define the metrics that will be used to measure success.

With a data platform in place and all the raw data available, businesses must determine the logic for specific metric definitions. Chances are these definitions already exist; if not, set aside time to talk with subject matter experts (SMEs) and confirm how key metrics should be defined. Once this is complete, the definitions are coded into the data models, which keep the dashboards updated with the most recent data.
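One way to code a definition into the data model is as a database view that every dashboard reads from. The sketch below uses SQLite purely for illustration, with a hypothetical `shift_output` table and a view named `daily_throughput`. A real warehouse or lakehouse would use its own SQL dialect or modeling tool, but the idea is the same: the definition lives in one place.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE shift_output "
    "(machine TEXT, shift_date TEXT, units_good INTEGER, shift_hours REAL)"
)
db.executemany(
    "INSERT INTO shift_output VALUES (?, ?, ?, ?)",
    [("M1", "2024-01-02", 408, 8.0), ("M2", "2024-01-02", 390, 8.0),
     ("M1", "2024-01-03", 400, 8.0)],
)

# The metric definition lives in one place: dashboards read this view,
# so a change to the definition is made once and flows to every report.
db.execute("""
    CREATE VIEW daily_throughput AS
    SELECT machine,
           shift_date,
           SUM(units_good) * 1.0 / SUM(shift_hours) AS units_per_hour
    FROM shift_output
    GROUP BY machine, shift_date
""")

for row in db.execute("SELECT * FROM daily_throughput ORDER BY machine, shift_date"):
    print(row)
```

Because the view is evaluated at query time, each dashboard refresh reflects whatever raw rows the pipelines have landed most recently.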

Returning to your goal of visualizing throughput, you need to determine an accurate theoretical throughput capacity for each machine, to compare against actuals. Next, you need to verify that the definition of throughput that you’ll use is correct. A simple definition would be:

Throughput = Total number of acceptable units produced / specified time frame (hour, shift, day, etc.)
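Applying that definition and comparing it with theoretical capacity takes only a few lines. The sketch below uses a hypothetical three-station serial line with made-up capacities and shift counts. It computes actual throughput per station, expresses it as a share of theoretical capacity, and flags the station running closest to its own capacity as a bottleneck candidate. That flag is a common heuristic, not a definitive test; queue buildup and downtime data should confirm it.

```python
def throughput(units_good, hours):
    """Acceptable units produced per hour over the chosen time frame."""
    if hours <= 0:
        raise ValueError("time frame must be positive")
    return units_good / hours

# Hypothetical theoretical capacities (units/hour) and one shift of actuals.
theoretical = {"Cutting": 60.0, "Welding": 50.0, "Painting": 55.0}
actual_units = {"Cutting": 400, "Welding": 392, "Painting": 380}
shift_hours = 8.0

for station, capacity in theoretical.items():
    actual = throughput(actual_units[station], shift_hours)
    print(f"{station}: {actual:.1f} of {capacity:.1f} units/h "
          f"({actual / capacity:.0%} of capacity)")

# The station running closest to its own capacity is a likely bottleneck
# candidate in a serial line (heuristic only).
utilization = {s: throughput(actual_units[s], shift_hours) / theoretical[s]
               for s in theoretical}
print("Bottleneck candidate:", max(utilization, key=utilization.get))
```

With these sample numbers, Welding runs at 98% of its theoretical capacity and is flagged. It is also the station with the lowest capacity on the line.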

However, be sure to discuss your specific definitions and account for any nuances in how your company measures throughput. You may also want metrics beyond throughput. Work in process (WIP), downtime, overall equipment effectiveness (OEE), total effective equipment performance (TEEP), capacity utilization, and first-time right (FTR) are just some of the other metrics worth considering to get a sense of where bottlenecks are impeding the production process.
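If OEE and TEEP make the list later, their commonly used decompositions are simple enough to code directly. OEE is availability × performance × quality. TEEP multiplies OEE by utilization, defined as planned production time divided by all calendar time. The shift figures below are hypothetical, and your SMEs may define the inputs (for example, what counts as planned time) differently.

```python
def oee(planned_minutes, run_minutes, ideal_cycle_minutes, total_count, good_count):
    """OEE = availability x performance x quality (common decomposition)."""
    availability = run_minutes / planned_minutes
    performance = (ideal_cycle_minutes * total_count) / run_minutes
    quality = good_count / total_count
    return availability * performance * quality

def teep(oee_value, planned_minutes, calendar_minutes):
    """TEEP = OEE x utilization, where utilization = planned time / all time."""
    return oee_value * (planned_minutes / calendar_minutes)

# Hypothetical shift: 480 planned minutes, 420 minutes running,
# 1-minute ideal cycle time, 400 units made, 380 of them acceptable.
shift_oee = oee(480, 420, 1.0, 400, 380)
print(f"OEE: {shift_oee:.1%}")
print(f"TEEP: {teep(shift_oee, 480, 1440):.1%}")
```

For this sample shift, availability is 87.5%, performance about 95.2%, and quality 95%, giving an OEE of about 79.2%. Spread over a 24-hour day (1,440 minutes), TEEP comes out at about 26.4%.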

But remember, you shouldn’t try to boil the ocean. Your initial goal is to provide automated reporting to show the actual and theoretical throughput capacity of machines. Bringing in additional metrics will require more time and risks overwhelming users. It’s better to start small, and to limit your first dashboard to 1-3 metrics. Then, as you start collecting feedback from users, you can carefully consider what’s worth adding.

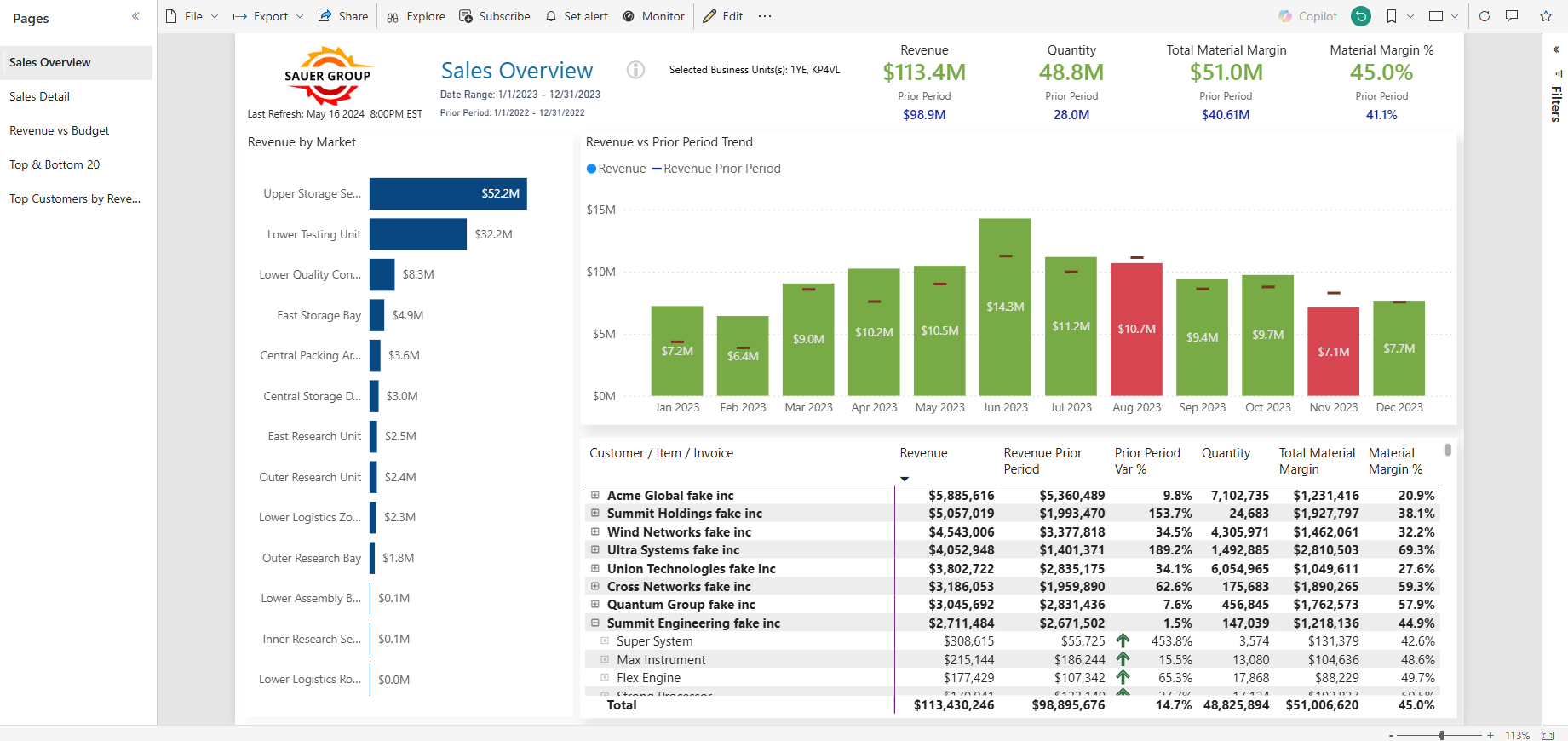

Build automated reports to help you track against goals.

At this stage, you should have the data platform set up, pipelines connected to each of your source systems, and data models with key metric definitions developed in the data warehouse or lakehouse. With these prerequisites in place, you arrive at the crucial “last mile” of a data initiative: determining how you’ll visualize the data for comprehension, clarity, and action.

There are many best practices when it comes to developing dashboards, but here are the main things you should focus on:

- Start small – don’t overwhelm users with too many metrics and visuals on one page.

- Provide definitions within the dashboard – include metric definitions, so that everyone can easily see how the numbers are determined.

- Go from big to small – highlight the key metrics at the top, followed by more detailed views. This allows users to keep the main goal in mind (improving throughput), while they look at more granular data.

- Base the dashboard around the problem your team is trying to solve. This prevents the dashboard from just being a “report,” and shapes it into a tool – one that’s built to assist with a high-priority strategic objective.

This is just a short list of best practices; you can find many more in Dashboard Design to Ensure Adoption. The idea is to keep the team aligned around the goal, to avoid overwhelming them with too much data (limit noise, increase signal), and to ensure that everyone understands how metrics are being measured, so that you have one version of “the truth.”

Monitor adoption and iterate continuously.

Now that you have an automated dashboard, take time to collect feedback and practice using this new tool to improve business results. There are various ways to monitor usage statistics for dashboards, but informal feedback is just as critical. After releasing a new dashboard, take time to talk with users to understand how they’re using the dashboard, what they like (and don’t like) about the layout, and what they want to see included.
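On the quantitative side, one simple adoption signal is weekly active viewers. Many BI platforms can report or export view activity, but the log format below is an assumption: a hypothetical list of (user, date viewed) pairs. The sketch counts distinct viewers per ISO week, which is enough to see whether usage grows, holds, or fades after launch.

```python
from collections import defaultdict
from datetime import date

# Hypothetical export of dashboard view events: (user, date viewed).
view_log = [
    ("ana", date(2024, 1, 1)), ("ana", date(2024, 1, 3)), ("ben", date(2024, 1, 2)),
    ("ana", date(2024, 1, 9)), ("cho", date(2024, 1, 10)), ("cho", date(2024, 1, 11)),
    ("dev", date(2024, 1, 12)),
]

def weekly_active_users(events):
    """Count distinct viewers per ISO (year, week)."""
    weeks = defaultdict(set)
    for user, day in events:
        year, week, _ = day.isocalendar()
        weeks[(year, week)].add(user)
    return {wk: len(users) for wk, users in sorted(weeks.items())}

print(weekly_active_users(view_log))
```

A falling count is a prompt for the informal conversations described above, not a verdict on its own.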

Developing business intelligence isn’t a one-time effort. It’s a continual process of analyzing how the business is leveraging available data to focus teams on the key metrics that determine profitable growth. Priorities and key initiatives change, and sometimes metric definitions change. As priorities shift, expect the specific needs for data to shift as well.

Guiding principles for data projects.

Data is a fundamental element in improving processes, whether it be spotting and monitoring bottlenecks or improving customer loyalty. Surprisingly, companies often have the data available but aren’t properly leveraging it to drive improvements. As this guide highlights, it’s important to keep a short list of guiding principles in mind:

- Start small – prove out a use case and build on it to automate more insights.

- Invest in a proper data platform to provide a foundation for analytics.

- Remember that dashboards are built for humans to consume data – what you develop shouldn’t overwhelm or distract from what’s most important.

- Measure your efforts and collect feedback, then use it to iterate. Dashboards should be considered living and breathing entities, and a solid feedback loop with users allows for strong adoption.